MMPE: A Multi-Modal Interface using Handwriting, Touch Reordering, and Speech Commands for Post-Editing Machine Translation

As machine translation has improved substantially in recent years, more and more professional translators are integrating it into their workflows. The process of taking a pre-translated text as a basis and improving it to create the final translation is called post-editing (PE). While PE can save time and reduce errors, it also changes the requirements for translation interfaces: the task shifts from mainly generating text to correcting errors in otherwise helpful translation proposals. This requires significantly less keyboard input, which in turn opens up potential for interaction modalities other than mouse and keyboard.

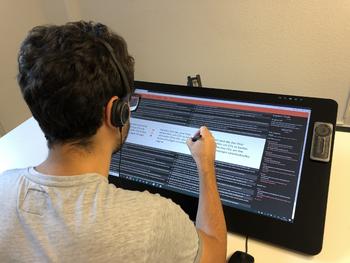

This paper therefore presents MMPE, a prototype that combines pen, touch, speech, and multi-modal interaction with conventional mouse and keyboard input. Users can edit machine translation proposals by crossing out text or handwriting words with a digital pen. MMPE also lets users delete words by double-tapping them with the pen or a finger, and reorder them via drag and drop. With speech commands, users can insert, delete, replace, and reorder words or subphrases, e.g., by saying “insert A after second B”. Finally, MMPE offers multi-modal combinations in which the user first specifies a target word or position by placing the cursor with the pen, finger touch, or mouse/keyboard. A voice command such as “delete” or “insert A” can then be issued without naming the position or word, which keeps the commands simpler. A video demonstration is available at https://youtu.be/H2YM2R8Wfd8.
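The paper summary does not describe how MMPE interprets spoken commands. As an illustration only, the following is a minimal sketch, assuming already-transcribed, lowercase command keywords and single-word targets, of how commands like “insert A after second B” could be resolved against a tokenized translation proposal, and how the select & speech variant drops the position by taking it from a cursor index. The functions `locate` and `apply_command`, the `ORDINALS` table, and the supported command grammar are all hypothetical. A touch double-tap or a drag and drop could map onto the same delete and move operations, with the word index coming from the touch point instead of the spoken reference.

```python
import re
from typing import List, Optional

# Hypothetical vocabulary: only a few spoken ordinals are supported.
ORDINALS = {"first": 1, "second": 2, "third": 3, "fourth": 4, "fifth": 5}


def locate(ref: str, tokens: List[str]) -> int:
    """Resolve a spoken reference such as 'B' or 'second B' to a token index."""
    parts = ref.split()
    if len(parts) == 2 and parts[0] in ORDINALS:
        word, wanted = parts[1], ORDINALS[parts[0]]
    elif len(parts) == 1:
        word, wanted = parts[0], 1
    else:
        raise ValueError(f"unsupported reference: {ref!r}")
    seen = 0
    for i, tok in enumerate(tokens):
        if tok == word:
            seen += 1
            if seen == wanted:
                return i
    raise ValueError(f"{ref!r} not found in sentence")


def apply_command(tokens: List[str], command: str,
                  cursor: Optional[int] = None) -> List[str]:
    """Apply one spoken edit command and return the edited token list.

    If `cursor` is given (a token index selected by pen, touch, or mouse),
    the short forms 'delete' and 'insert A' act at that position.
    """
    out = list(tokens)  # never mutate the caller's list
    cmd = command.strip()

    if m := re.fullmatch(r"insert (\w+) (after|before) (.+)", cmd):
        word, side, ref = m.groups()
        i = locate(ref, out)
        out.insert(i + 1 if side == "after" else i, word)
    elif m := re.fullmatch(r"insert (\w+)", cmd):
        # Multi-modal: the position comes from the cursor, not from speech.
        if cursor is None:
            raise ValueError("'insert A' needs a cursor position")
        out.insert(cursor, m.group(1))
    elif m := re.fullmatch(r"delete (.+)", cmd):
        del out[locate(m.group(1), out)]
    elif cmd == "delete":
        # Multi-modal: delete the word the user selected beforehand.
        if cursor is None:
            raise ValueError("'delete' needs a selected word")
        del out[cursor]
    elif m := re.fullmatch(r"replace (.+) with (\w+)", cmd):
        out[locate(m.group(1), out)] = m.group(2)
    elif m := re.fullmatch(r"move (.+?) (after|before) (.+)", cmd):
        src, side, ref = m.groups()
        word = out.pop(locate(src, out))
        j = locate(ref, out)  # resolved after removing the moved word
        out.insert(j + 1 if side == "after" else j, word)
    else:
        raise ValueError(f"unrecognized command: {command!r}")
    return out


mt = "the cat sat on the mat".split()
print(apply_command(mt, "insert big after second the"))
# ['the', 'cat', 'sat', 'on', 'the', 'big', 'mat']
print(apply_command(mt, "delete", cursor=1))  # select & speech
# ['the', 'sat', 'on', 'the', 'mat']
```

The speech-only form must carry its own disambiguation (“second the”), whereas the select & speech form offloads it to the cursor, which mirrors the trade-off between the two approaches discussed in the study.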

Our study with professional translators shows strong interest in and enthusiasm for these new modalities. For deletions and reorderings, pen and touch both received high subjective ratings, with the pen rated even higher than mouse & keyboard. Participants especially highlighted that pen and touch deletion or reordering “nicely resemble a standard correction task”. For insertions and replacements, speech and the multi-modal combination of selection and speech were seen as suitable interaction modes; however, mouse & keyboard were still preferred and faster. Here, participants favored the speech-only approach for simple commands, but stated that the multi-modal approach becomes relevant when ambiguities in the sentence make speech-only commands too complex.

Rather than replacing the human translator with artificial intelligence (AI), this paper thus investigates how a multi-modal interface for correcting machine translation output can better support human-AI collaboration in translation.