Paper & Poster Publications at IEEE VR 2025

The UMTL has published a new full paper and a new poster at the international IEEE VR 2025 conference in Saint-Malo, France! The paper describes how virtual reality can enhance plant breeding. The poster introduces a novel haptic bracelet that simulates wind in virtual environments; it was developed as part of a Master's thesis at the UMTL.

Four Papers & Best Paper Award at CHI 2024

Four papers involving UMTL researchers have been accepted at CHI 2024, and one of them received the Best Paper Award! Check out the research projects, which range from sports technology and VR illusions to the preparation of human spaceflight missions to the Moon!

André Zenner receives the IEEE Virtual Reality Best Dissertation Award 2024

André Zenner received the IEEE VGTC Virtual Reality Best Dissertation Award 2024 for his dissertation on haptics for virtual reality, supervised by Antonio Krüger. The award recognizes outstanding academic research and development in the field of virtual reality, with selection criteria including the significance and impact of the scholarly contributions, and the clarity with which these contributions are communicated.

The Staircase Procedure Toolkit

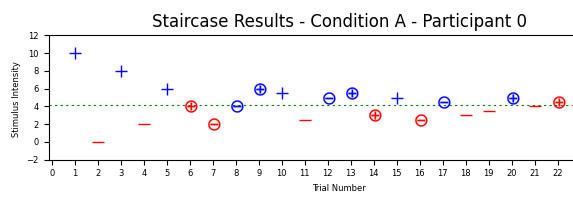

We propose a novel open-source software toolkit to support researchers in the domains of human-computer interaction (HCI) and virtual reality (VR) in conducting psychophysical experiments. Our toolkit is designed to work with the widely-used Unity engine and is implemented in C# and Python. With the toolkit, researchers can easily set up, run, and analyze experiments to find perceptual detection thresholds using the adaptive weighted up/down method, also known as the staircase procedure. Besides being straightforward to integrate in Unity projects, the toolkit automatically stores experiment results, features a live plotter that visualizes answers in real time, and offers scripts that help researchers analyze the gathered data using statistical tests.
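As a rough illustration of the adaptive weighted up/down method mentioned above, the following Python sketch runs a single staircase against a simulated participant. It is not the toolkit's code or API: the function names (`weighted_up_down`, `simulated_observer`), all parameters, the logistic observer model, and the choice to average reversal levels are assumptions made for illustration. The sketch relies on the weighted up/down rule in which the down step and up step are chosen so that their ratio equals (1 - p) / p for a target detection probability p.

```python
import math
import random


def weighted_up_down(respond, start, step_down, target_p=0.75,
                     max_reversals=8, max_trials=200):
    """Run one weighted up/down staircase (illustrative sketch).

    respond(level) -> bool is a hypothetical callback that returns True
    if the participant detected the stimulus at the given level.
    Returns (threshold_estimate, history).
    """
    # Equilibrium condition: target_p * step_down == (1 - target_p) * step_up
    step_up = step_down * target_p / (1.0 - target_p)

    level = start
    history = []          # (level, detected) for every trial
    reversals = []        # levels at which the direction changed
    last_direction = 0    # -1 = moving down, +1 = moving up, 0 = no trial yet

    for _ in range(max_trials):
        detected = respond(level)
        history.append((level, detected))

        # Detected -> make the stimulus weaker; missed -> make it stronger.
        direction = -1 if detected else 1
        if last_direction != 0 and direction != last_direction:
            reversals.append(level)
            if len(reversals) >= max_reversals:
                break
        last_direction = direction

        if detected:
            level = max(0.0, level - step_down)
        else:
            level = level + step_up

    if not reversals:
        return level, history
    # Assumption: estimate the threshold as the mean of the reversal levels.
    return sum(reversals) / len(reversals), history


def simulated_observer(threshold, slope, rng):
    """Hypothetical participant with a logistic psychometric function."""
    def respond(level):
        p_detect = 1.0 / (1.0 + math.exp(-(level - threshold) / slope))
        return rng.random() < p_detect
    return respond


if __name__ == "__main__":
    rng = random.Random(42)
    observer = simulated_observer(threshold=5.0, slope=1.0, rng=rng)
    estimate, trials = weighted_up_down(observer, start=10.0, step_down=0.5)
    print(f"estimated threshold: {estimate:.2f} after {len(trials)} trials")
```

With a target probability of 0.75, the up step is three times the down step, so the staircase settles around the stimulus level that the simulated participant detects in roughly 75% of trials.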

Detectability of Saccadic Hand Offset in Virtual Reality

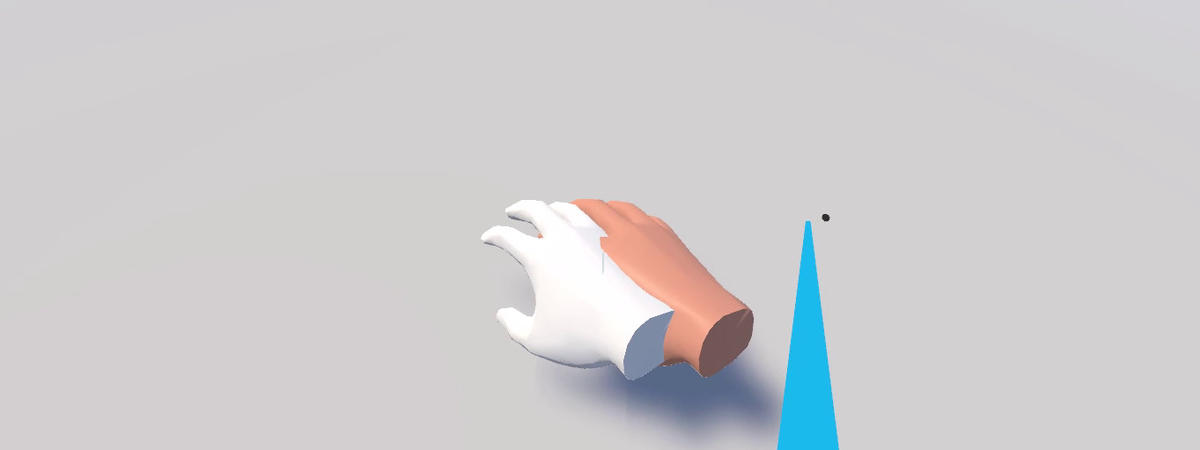

On the way towards novel hand redirection (HR) techniques that make use of change blindness, the next step is to take advantage of saccades for hiding body warping. A prerequisite for saccadic HR algorithms, however, is to know how much the user’s virtual hand can unnoticeably be offset during saccadic suppression. We contribute this knowledge by conducting a psychophysical experiment, which lays the ground for upcoming HR techniques by exploring the perceptual detection thresholds (DTs) of hand offset injected during saccades. Our findings highlight the pivotal role of saccade direction for unnoticeable hand jumps, and reveal that most offset goes unnoticed when the saccade and hand move in opposite directions. Based on the gathered perceptual data, we derived a model that considers the angle between saccade and hand offset direction to predict the DTs of saccadic hand jumps.

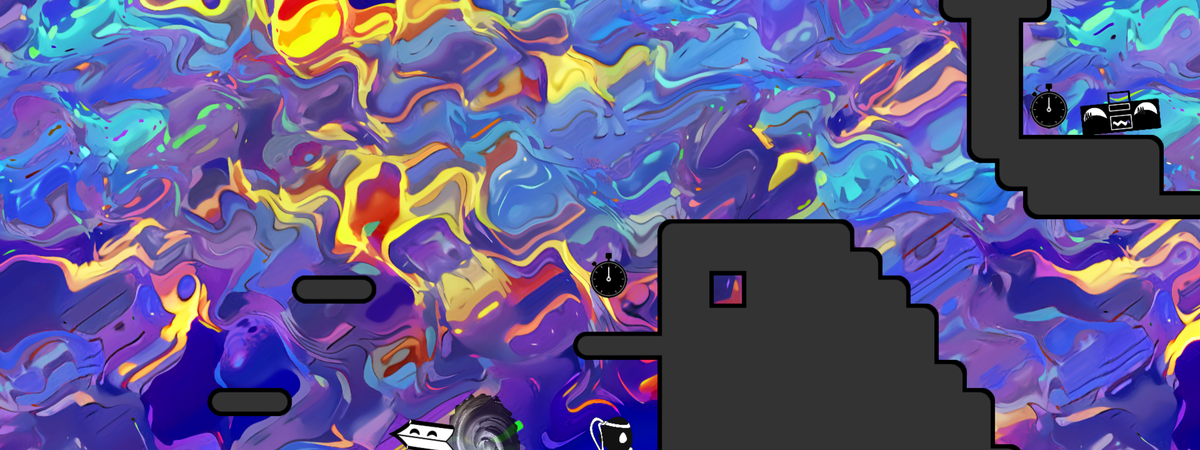

Rapid Game Development 2023 - Creating a Computer Game in a Cross-University, Interdisciplinary Team

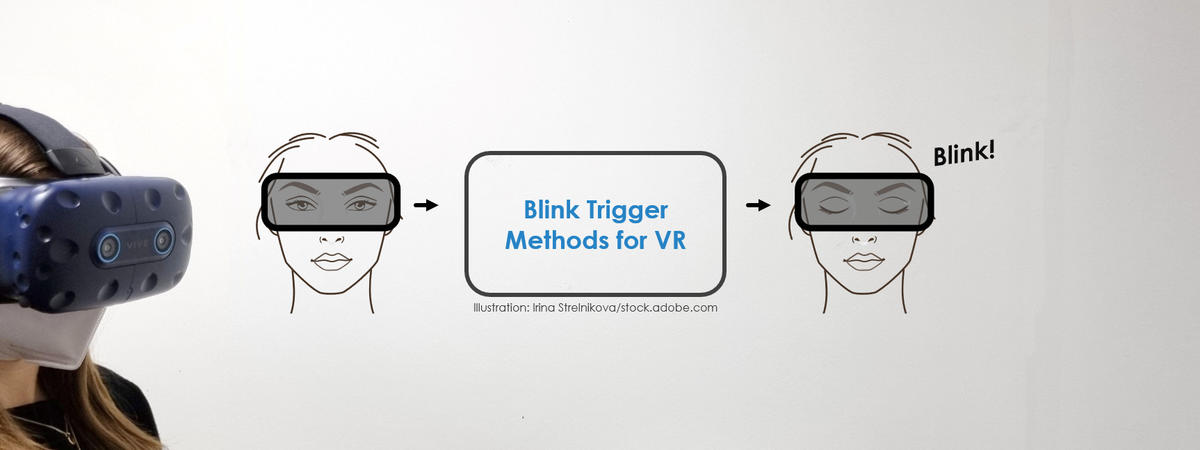

Induce a Blink of the Eye: Evaluating Techniques for Triggering Eye Blinks in Virtual Reality

As more and more virtual reality (VR) headsets support eye tracking, recent techniques have started to use eye blinks to induce unnoticeable manipulations to the virtual environment, e.g., to redirect users' actions. However, to exploit their full potential, more control over users' blinking behavior in VR is required. To this end, we propose a set of reflex-based blink triggers that are suited specifically for VR. In accordance with blink-based techniques for redirection, we formulate (i) effectiveness, (ii) efficiency, (iii) reliability, and (iv) unobtrusiveness as central requirements for successful triggers. We implement the soft- and hardware-based methods and compare the four most promising approaches in a user study. Our results highlight the pros and cons of the tested triggers, and show that those based on the menace, corneal, and dazzle reflexes perform best. From these results, we derive recommendations that help in choosing suitable blink triggers for VR applications.

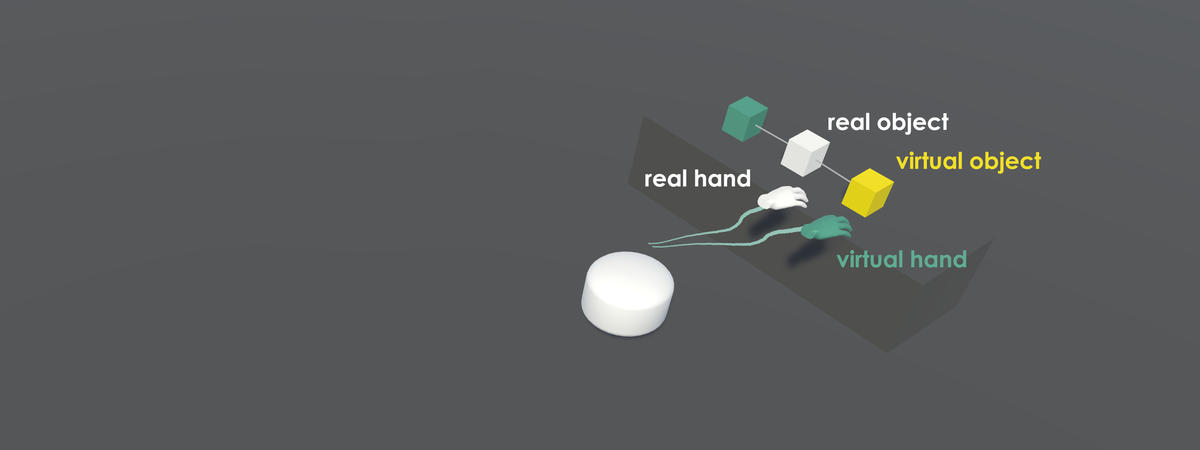

Designing Visuo-Haptic Illusions with Proxies in Virtual Reality: Exploration of Grasp, Movement Trajectory and Object Mass

We investigated the role of different design variables in visuo-proprioceptive conflicts, allowing us to design visuo-haptic illusions which remain undetectable for humans.

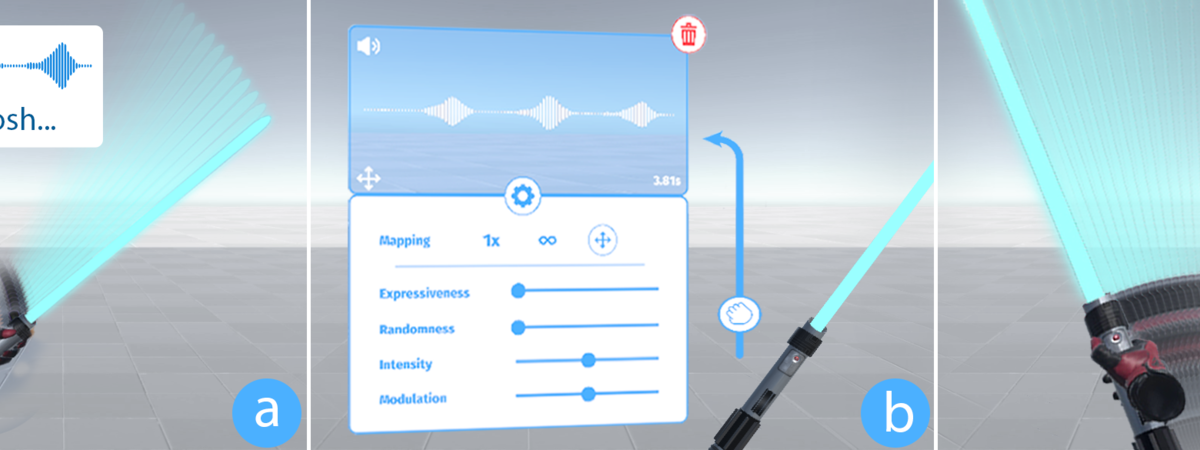

Weirding Haptics: In-Situ Prototyping of Vibrotactile Feedback in Virtual Reality through Vocalization

With the Weirding Haptics design tool, one can design vibrotactile feedback in a virtual environment using their voice.

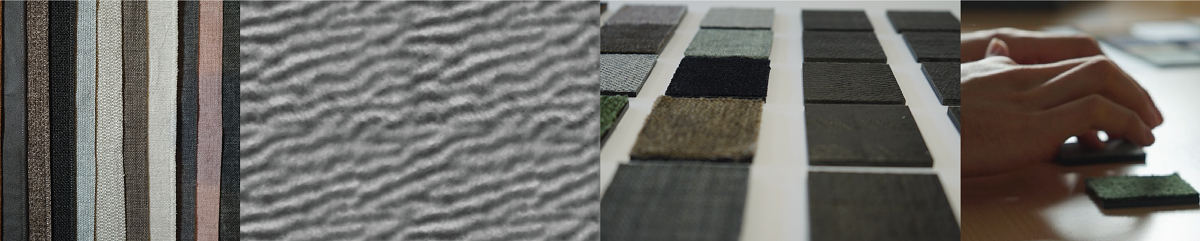

Capturing Tactile Properties of Real Surfaces for Haptic Reproduction

We reconstructed surface textures from a sample book using a photometric reconstruction method and fabricated replicas of them. The set of original and replica surface samples was used in a two-part user study to investigate the transfer of tactile properties for additive manufacturing.

Hakoniwa: Enhancing Physical Gamification using Miniature Garden Elements

Gamification has been shown to increase motivation and enhance user experience. Recent research proposes adding physicality to otherwise digital gamification elements to increase their meaningfulness. In this work, we look towards gardens in the context of gamification. Motivated by their positive impact on both physical and mental health, we investigate whether gamification elements can be represented by miniature garden elements. Based on the rules of Japanese gardens, we contribute an exploratory framework informing the design of gamified miniature gardens. Building upon this, we realize physical implementations of a waterfall, a sand basin and a water pond reflecting the gamification elements of points and progress. In an online study (N = 102), we investigate the perception and persuasiveness of our prototypes and outline improvements to support further investigations.

HaRT - The Virtual Reality Hand Redirection Toolkit

Past research has proposed various hand redirection techniques for virtual reality (VR) to enhance haptics and 3D interactions. In this work, we present the Virtual Reality Hand Redirection Toolkit (HaRT), an open-source framework developed for the Unity engine. The toolkit aims to support both novice and expert VR researchers and practitioners in implementing and evaluating hand redirection techniques.

Blink-Suppressed Hand Redirection in VR

We present Blink-Suppressed Hand Redirection (BSHR), the first body warping technique that makes use of blink-induced change blindness, to study the feasibility and detectability of hand redirection based on blink suppression. In a psychophysical experiment, we verify that unnoticeable blink-suppressed hand redirection is possible and derive corresponding detection thresholds. Our findings also indicate that the range of unnoticeable BSHR can be increased by combining blink-suppressed instantaneous hand shifts with continuous warping. As an additional contribution, we derive detection thresholds for Cheng et al.'s (2017) body warping technique that does not leverage blinks.

Combining Dynamic Passive Haptics & Haptic Retargeting in VR

For immersive proxy-based haptics in virtual reality, the challenges of similarity and colocation must be solved. To do so, we propose to combine the techniques of Dynamic Passive Haptic Feedback and Haptic Retargeting, both of which have individually been shown to tackle these challenges successfully but have not yet been studied in combination. In two proof-of-concept experiments focused on the perception of virtual weight distribution inside a stick, we validate that their combination solves the challenges of similarity and colocation better than either technique alone.

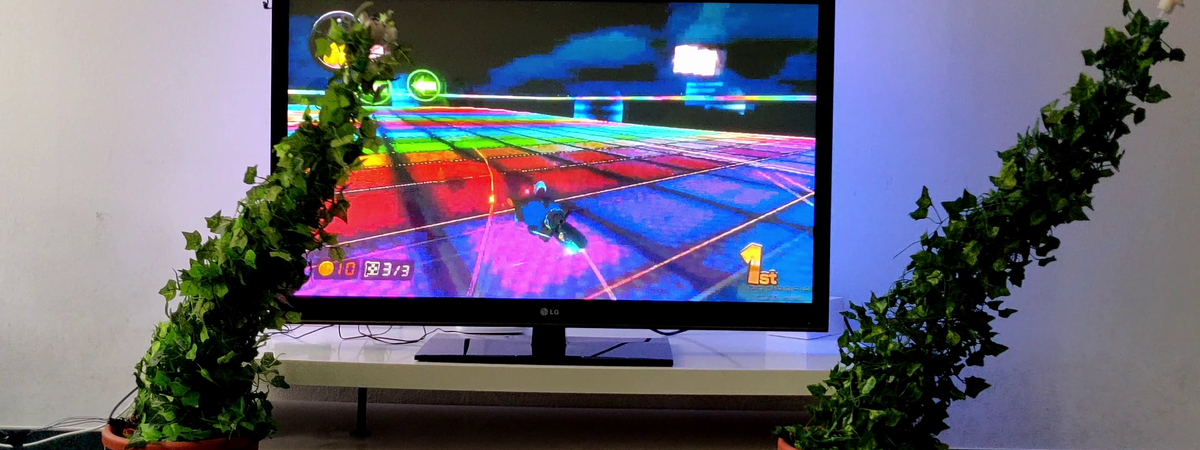

HexArcade: Predicting Hexad User Types By Using Gameful Applications

Personalization is essential for gameful systems. Past research showed that the Hexad user types model is particularly suitable for personalizing user experiences. The validated Hexad user types questionnaire is an effective tool for scientific purposes. However, it is less suitable in practice for personalizing gameful applications, because filling out a questionnaire potentially affects a person's gameful experience and immersion within an interactive system negatively. Furthermore, studies investigating correlations between Hexad user types and preferences for gamification elements were survey-based (i.e., not based on user behaviour). In this paper, we improve upon both these aspects. In a user study (N=147), we show that gameful applications can be used to predict Hexad user types and that the interaction behaviour with gamification elements corresponds to a user's Hexad type. Ultimately, participants perceived our gameful applications as more enjoyable and immersive than filling out the Hexad questionnaire.

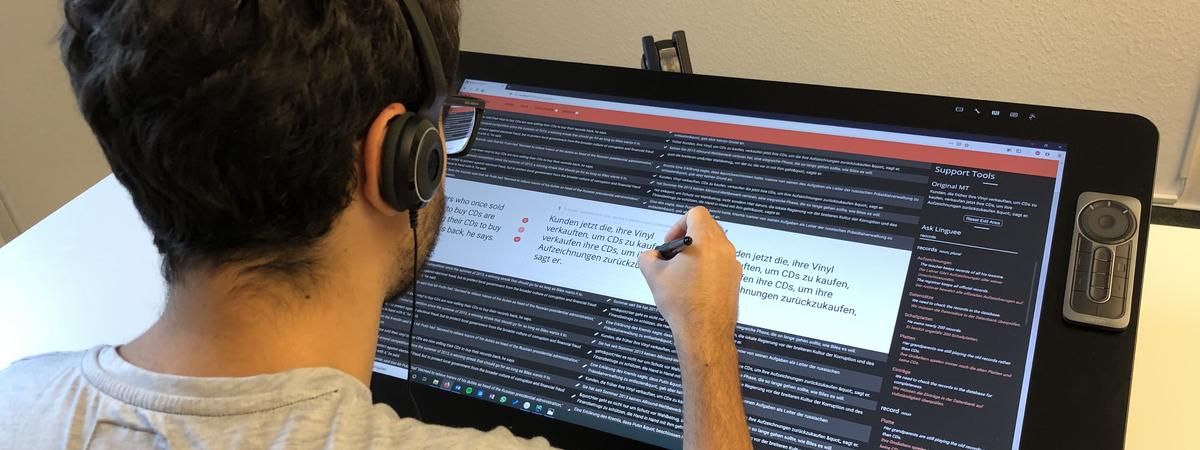

MMPE: A Multi-Modal Interface using Handwriting, Touch Reordering, and Speech Commands for Post-Editing Machine Translation

The shift from traditional translation to post-editing (PE) of machine-translated (MT) text can save time and reduce errors, but it also affects the design of translation interfaces, as the task changes from mainly generating text to correcting errors within otherwise helpful translation proposals. Since this paradigm shift offers potential for modalities other than mouse and keyboard, we present MMPE, the first prototype to combine traditional input modes with pen, touch, and speech modalities for PE of MT. Users can directly cross out or hand-write new text, drag and drop words for reordering, or use spoken commands to update the text in place. All text manipulations are logged in an easily interpretable format to simplify subsequent translation process research. The results of an evaluation with professional translators suggest that pen and touch interaction are suitable for deletion and reordering tasks, while speech and multi-modal combinations of select & speech are considered suitable for replacements and insertions. Overall, experiment participants were enthusiastic about the new modalities and saw them as useful extensions to mouse & keyboard, but not as a complete substitute.

The Jam Plotter: A Student Project for the Interactive Systems Course

During the course on Interactive Systems in 2019, students built an automated jam plotter to optimize your toast every morning!

The Cocktail Machine: A Student Project for the Interactive Systems Course

During the course on Interactive Systems in 2019, students built an automated cocktail machine to make life easier. The version shown here was re-implemented and designed by Marie Schmidt.

Rapid Game Development - Cross-University Gaming Event

AmbiPlant: Ambient Feedback for Digital Media through Actuated Plants

To enhance viewing experiences during digital media consumption, both research and industry have considered ambient feedback effects to visually and physically extend the content presented. In this paper, we present AmbiPlant, a system using support structures for plants as interfaces for providing ambient effects during digital media consumption. In our concept, the media content presented to the viewer is augmented with visual actuation of the plant structures in order to enhance the viewing experience. We report on the results of a user study comparing our AmbiPlant condition to a condition with ambient lighting and a condition without ambient effects. Our system outperformed the condition without ambient effects in terms of engagement, entertainment, excitement and innovation, and the ambient lighting condition in terms of excitement and innovation.

Multi-modal Approaches for Post-Editing Machine Translation

Current advances in machine translation increase the need for translators to switch from traditional translation to post-editing (PE) of machine-translated text, a process that saves time and improves quality. This affects the design of translation interfaces, as the task changes from mainly generating text to correcting errors within otherwise helpful translation proposals. The results of our elicitation study with professional translators indicate that a combination of pen, touch, and speech could support common PE tasks well, and these modalities received high subjective ratings from our participants. Therefore, we argue that future translation environment research should focus more strongly on these modalities in addition to mouse- and keyboard-based approaches. On the other hand, eye tracking and gesture modalities seem less important. An additional interview regarding interface design revealed that most translators would also see value in automatically receiving additional resources when a high cognitive load is detected during PE.

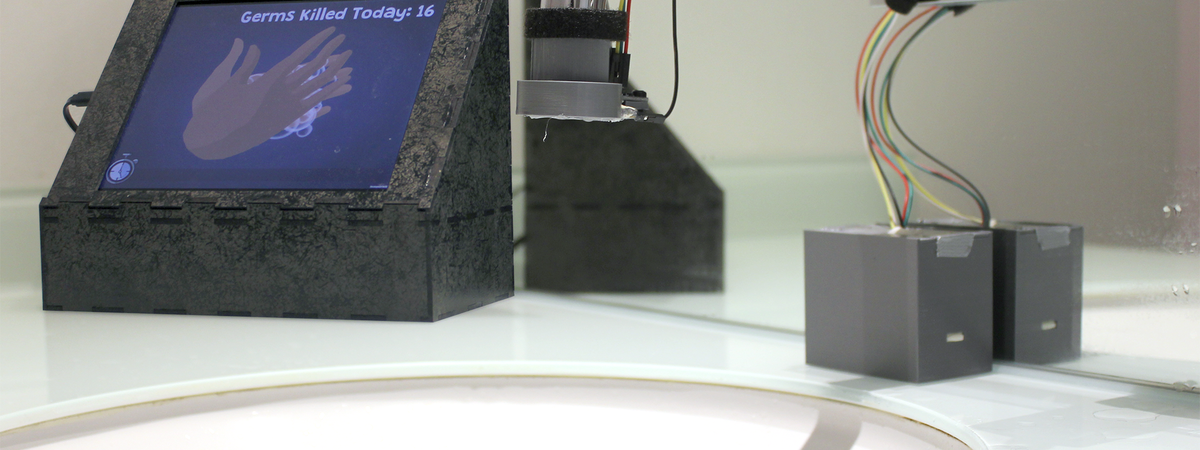

Germ Destroyer - A Gamified System to Increase the Hand Washing Duration in Shared Bathrooms

Washing hands is important for public health as it prevents spreading germs to other people. One of the most important factors in cleaning hands is the hand washing duration. However, people mostly do not wash their hands for a long enough time leading to infections and diseases for themselves and others. To counter this, we present “Germ Destroyer”, a system consisting of a sensing device which can be mounted on the water tap and a mobile application providing gameful feedback to encourage users to meet the recommended duration. In the mobile application, users kill germs and collect points by washing their hands. Through a laboratory study (N=14) and a 10-day in-the-wild study (363 hand washing sessions), we found that Germ Destroyer enhances the enjoyment of hand washing, reduces the perceived hand washing duration, almost doubles the actual hand washing duration, and has the potential to reduce the risk of infection.

Overgrown: Supporting Plant Growth with an Endoskeleton for Ambient Notifications

Ambient notifications are an essential element to support users in their daily activities. Designing effective and aesthetic notifications that balance the alert level while maintaining an unobtrusive dialog requires them to be seamlessly integrated into the user’s environment. In an attempt to employ the living environment around us, we designed Overgrown, an actuated robotic structure capable of supporting a plant to grow over itself. As a plant endoskeleton, Overgrown aims to engage human empathy towards living creatures to increase the effectiveness of ambient notifications while ensuring better integration with the environment. In a focus group, Overgrown was perceived as having personality, showed potential as a user’s ambient avatar, and was considered suited for social experiments.

Immersive Process Models

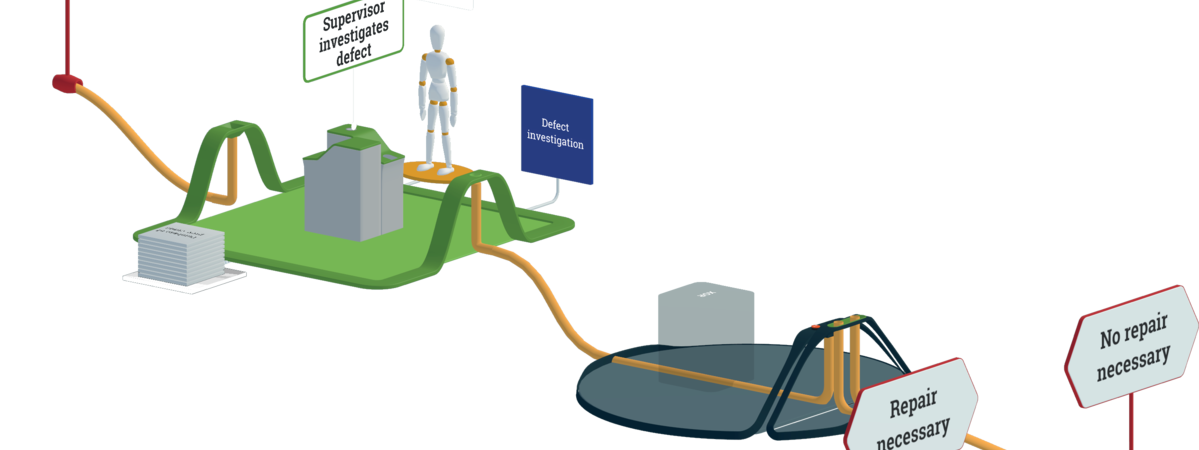

In many domains, real-world processes are traditionally communicated to users through abstract graph-based models like event-driven process chains (EPCs), i.e. 2D representations on paper or desktop monitors. We propose an alternative interface to explore EPCs, called immersive process models, which aims to transform the exploration of EPCs into a multisensory virtual reality journey.

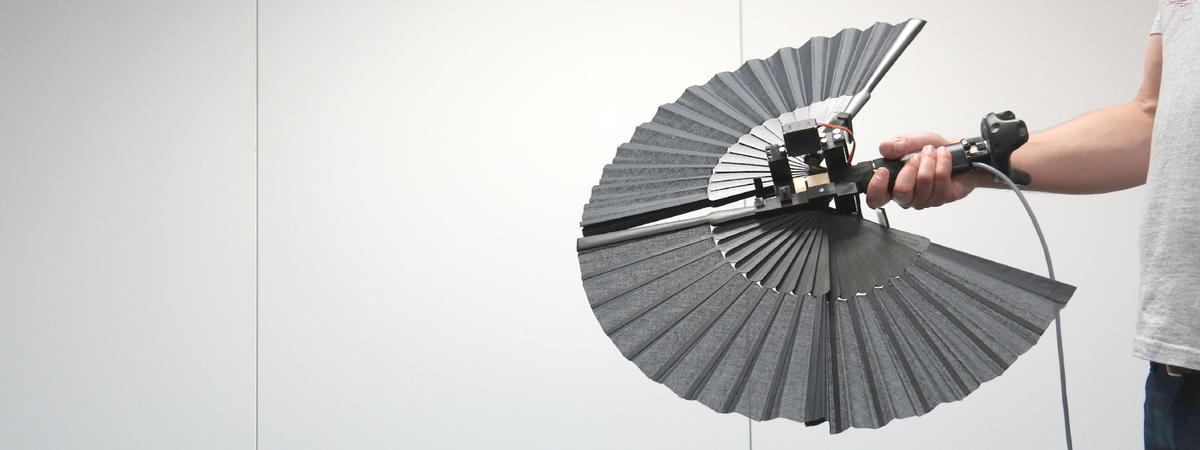

Drag:on - A Virtual Reality Controller Based on Drag and Weight Shift

Standard controllers for virtual reality (VR) lack sophisticated means to convey a realistic, kinesthetic impression of size, resistance or inertia. We present the concept and implementation of Drag:on, an ungrounded shape-changing VR controller that provides dynamic passive haptic feedback based on drag, i.e. air resistance, and weight shift.

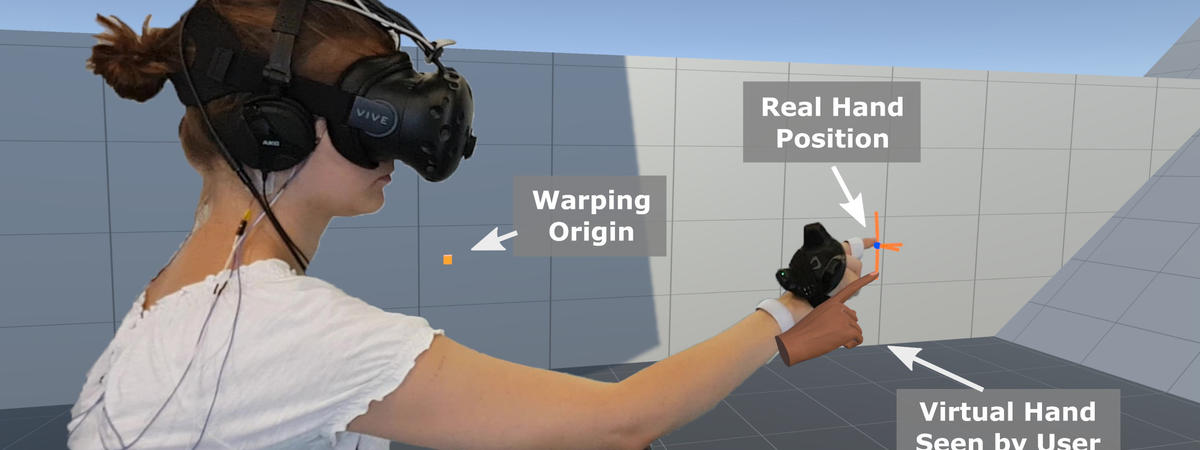

Detection Thresholds for Hand Redirection in Virtual Reality

We present the results of an experiment on interaction in Virtual Reality. In the experiment, an interaction technique known as hand redirection was investigated. With this technique, the virtual hand of a user in Virtual Reality is displayed slightly offset from the real hand position, thereby "redirecting" the user's movement in real space. With the results of the experiment it was possible to derive how much redirection can go unnoticed by the user, even in "worst case" scenarios. This is particularly important for the development of VR applications that aim to redirect users in an undetectable way, e.g. for haptic retargeting.
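To make the idea of a slightly offset virtual hand concrete, the sketch below shows one common way such an offset can be computed: the offset grows linearly as the real hand travels from a start position towards a physical target, so that the virtual hand arrives at a different, virtual target. This is an illustrative assumption, not necessarily the technique evaluated in the experiment; the function name `warp_hand` and all parameters are hypothetical.

```python
import math


def warp_hand(real_hand, start, physical_target, virtual_target):
    """Return the rendered (virtual) hand position for a tracked real hand.

    All arguments are (x, y, z) tuples in the same coordinate system.
    The offset between virtual and physical target is blended in linearly
    with the hand's progress from start to physical target.
    """
    total = math.dist(start, physical_target)
    if total == 0.0:
        progress = 1.0
    else:
        # 0.0 at the start position, 1.0 when the physical target is reached.
        progress = 1.0 - math.dist(real_hand, physical_target) / total
        progress = min(1.0, max(0.0, progress))

    # Full offset = difference between where the user should appear to reach
    # and where the real hand actually ends up.
    return tuple(
        hand + (virt - phys) * progress
        for hand, phys, virt in zip(real_hand, physical_target, virtual_target)
    )


if __name__ == "__main__":
    start = (0.0, 0.0, 0.0)
    physical_target = (0.0, 0.0, 1.0)   # real prop, 1 m in front of the start
    virtual_target = (0.1, 0.0, 1.0)    # virtual object, 10 cm to the side
    halfway = (0.0, 0.0, 0.5)
    print(warp_hand(halfway, start, physical_target, virtual_target))
```

In this formulation, the distance between the two targets determines how strongly the hand is redirected, which is the kind of quantity a detection threshold constrains.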

Virtual Reality Upholstered Furniture Configurator

VR offers added value over the printed product catalog through a spatial and realistic representation of the configuration. Thanks to the intuitive design, upholstered furniture can be planned, configured, and experienced easily and in real time. You are guided through the planning process in several steps and can thus quickly create your own piece of upholstered furniture.

Multi-modal Indicators for Estimating Perceived Cognitive Load in Post-Editing of Machine Translation

Current advances in machine translation (MT) increase the need for translators to switch from traditional translation to post-editing (PE) of machine-translated text, a process that saves time and improves quality. In this work, we develop a model that uses a wide range of physiological and behavioral sensor data to estimate perceived cognitive load (CL) during PE of MT text. By predicting the subjectively reported perceived CL, we aim to quantify the extent of demands placed on the mental resources available during PE. This could for example be used to better capture the usefulness of MT proposals for PE, including the mental effort required, in contrast to the mere closeness to a reference perspective that current MT evaluation focuses on. We compare the effectiveness of our physiological and behavioral features individually and in combination with each other and with the more traditional text and time features relevant to the task. Many of the physiological and behavioral features have not previously been applied to PE. Based on the data gathered from 10 participants, we show that our multi-modal measurement approach outperforms all baseline measures in terms of predicting the perceived level of CL as measured by a psychological scale. Combinations of eye-, skin-, and heart-based indicators enhance the results over each individual measure. Additionally, adding PE time improves the regression results further. An investigation of correlations between the best performing features, including sensor features previously unexplored in PE, and the corresponding subjective ratings indicates that the multi-modal approach takes advantage of several weakly to moderately correlated features to combine them into a stronger model.

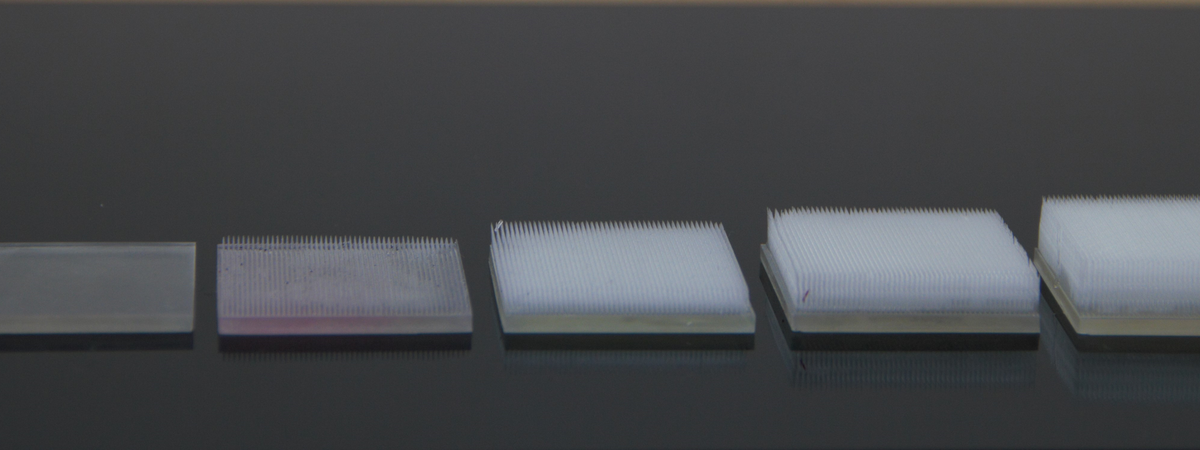

Enhancing Texture Perception in Virtual Reality Using 3D-Printed Hair Structures

Experiencing materials in virtual reality (VR) is enhanced by combining visual and haptic feedback. While VR easily allows changes to visual appearances, modifying haptic impressions remains challenging. Existing passive haptic techniques require access to a large set of tangible proxies. To reduce the number of physical representations, we look towards fabrication to create more versatile counterparts. In a user study, 3D-printed hairs with length varying in steps of 2.5 mm were used to influence the feeling of roughness and hardness. By overlaying fabricated hair with visual textures, the resolution of the user’s haptic perception increased. As changing haptic sensations are able to elicit perceptual switches, our approach can extend a limited set of textures to a much broader set of material impressions. Our results give insights into the effectiveness of 3D-printed hair for enhancing texture perception in VR.

Slackliner - An Interactive Slackline Training Assistant

In this project, we present Slackliner, an interactive slackline training assistant which features a life-size projection, skeleton tracking and real-time feedback. We chose a set of exercises from slackline literature and implemented an interactive trainer which guides the user through the exercises and gives feedback on whether each exercise was executed correctly. A post-analysis gives users feedback about their performance. Overall, the results of our study indicate that the interactive slackline training system can be used as an enjoyable and effective alternative to classic training methods.

Gamified Ads: Bridging the Gap Between User Enjoyment and the Effectiveness of Online Ads

We investigate the user enjoyment and the advertising effectiveness of playfully deactivating online ads. We created eight game concepts, performed a pre-study assessing the users' perception of them (N=50) and implemented three well-perceived ones. In a lab study (N=72), we found that these game concepts are more enjoyable than deactivating ads without game elements. Additionally, one game concept was even preferred over using an ad blocker. Notably, playfully deactivating ads was shown to have a positive impact on users' brand and product memory, enhancing the advertising effectiveness. Thus, our results indicate that playfully deactivating ads is a promising way of bridging the gap between user enjoyment and effective advertising.

Immersive Notification Framework for Virtual Reality

Notifications in everyday virtual reality (VR) applications are currently realized by displaying generic pop-ups within the immersive virtual environment (IVE) containing the message of the sender. However, this approach tends to break the immersion of the user. In order to preserve the immersion and the suspension of disbelief, we propose to adapt the method of notification to the current situation of the user in the IVE and the messages' priority. We propose the concept of adaptive and immersive notifications in VR and introduce an open-source framework which implements our approach. The framework aims to serve as an easy-to-extend code base for developers of everyday VR applications. As an example, we implemented a messaging application that can be used by a non-immersed person to send text messages to an immersed user. We describe the concept and our open-source framework and discuss ideas for future work.

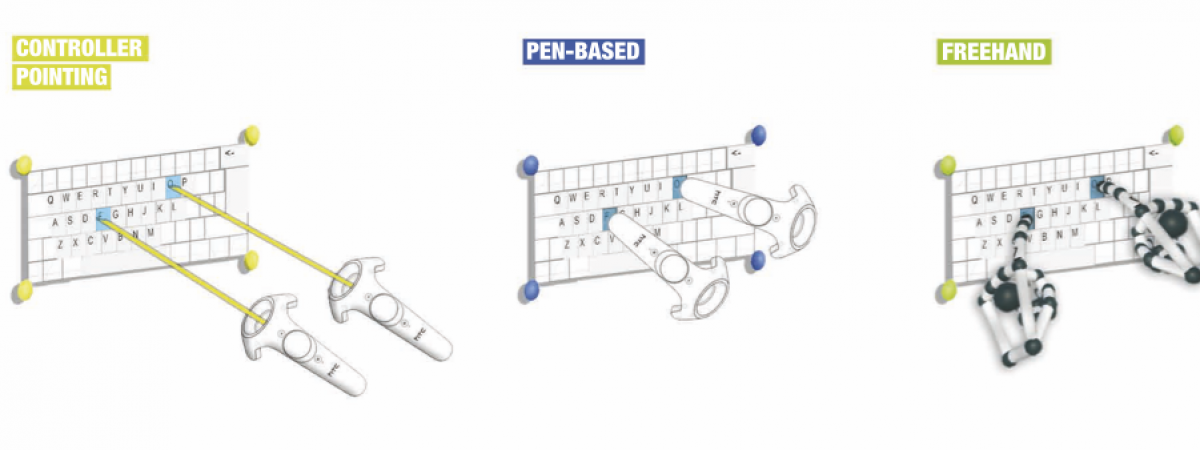

Selection-based Text Entry in Virtual Reality

In recent years, Virtual Reality (VR) and 3D User Interfaces (3DUI) have seen a drastic increase in popularity, especially in terms of consumer-ready hardware and software. While the technology for input as well as output devices is market ready, only a few solutions for text input exist, and empirical knowledge about performance and user preferences is lacking. In this paper, we study text entry in VR by selecting characters on a virtual keyboard. We discuss the design space for assessing selection-based text entry in VR. Then, we implement six methods that span different parts of the design space and evaluate their performance and user preferences. Our results show that pointing using tracked hand-held controllers outperforms all other methods. Other methods such as head pointing can be viable alternatives depending on available resources. We summarize our findings by formulating guidelines for choosing optimal virtual keyboard text entry methods in VR.

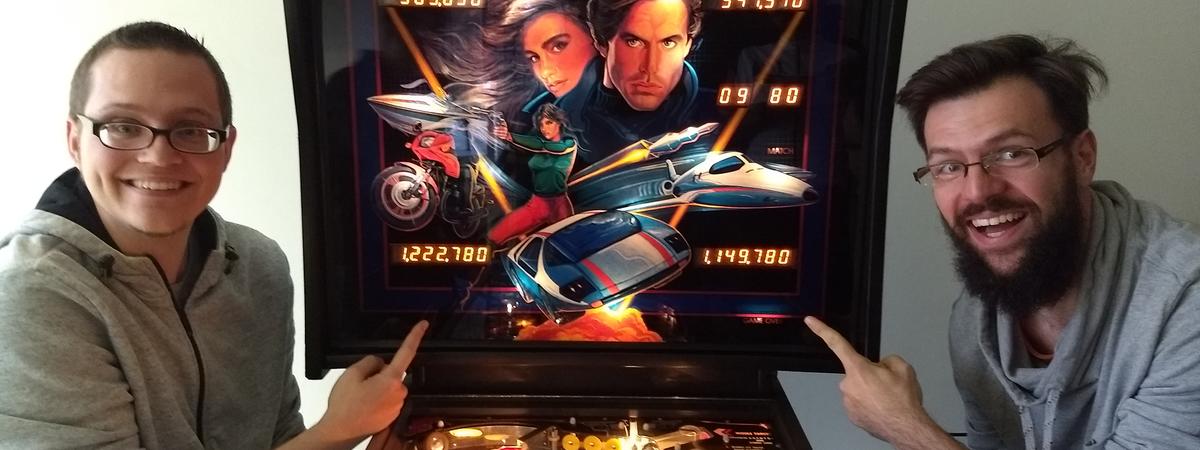

Mixed Reality Pinball

This student project aims to use Mixed Reality (VR/AR) to create a new pinball experience that connects modern technologies with original old-school pinball machines provided by Pinball Dreams from Saarbrücken.

MMPE: Multi-modal and Language Technology Based Post-Editing Support for Machine Translation

To ensure translation results at a professional human quality level, the output of Machine Translation (MT) systems in many cases has to be manually post-edited by human experts. The post-editing process is carried out within a post-editing (PE) environment, a user interface that supports the capture and correction of mistakes, as well as the selection, manipulation, adaptation and recombination of good segments. PE is a complex and challenging task involving considerable cognitive load. To date, PE environments mostly rely on traditional graphical user interfaces (GUIs), involving a computer screen as display and keyboard and mouse as input devices. In this research project, we propose the design, development, implementation and extensive road-testing and evaluation of a novel multi-modal post-editing support for machine translation for translation professionals, which extends traditional input techniques of a PE system, such as keyboard and mouse, with novel free-hand and screen gestures, as well as speech and gaze input modalities (and their combinations). The objectives of the research are to increase the usability and the user experience of post-editing Machine Translation and to reduce the overall cognitive load of the translation task, supporting (i) the core post-editing tasks as well as (ii) controlling the PE system and environment. To achieve these objectives, the multi-modal PE environments will be integrated with quality prediction (QE) to automatically guide the search for useful segments and mistakes, as well as with automatic PE via incremental adaptation of MT to PEs to avoid repeated mistakes.
The environments will be road-tested with human translation professionals and trainees and, where possible, within the partner projects in the package proposal (Paketantrag; Riezler, Frazer, Ney and Waibel). Where possible, the post-edited data captured will also feed into the dynamic and incremental MT retraining and update approaches pursued in the partner projects.

VRShop: A Mobile Interactive Virtual Reality Shopping Environment

In this work, we explored the main characteristics of on- and offline shops with regard to customer shopping behavior and frequency. Based on this, we designed and implemented an immersive virtual reality (VR) online shopping environment. We tried to maintain the benefits of online shops, like search functionality and availability, while simultaneously focusing on shopping experience and immersion. By adding the third dimension, VR provides a more advanced form of visualization, which can increase the customer’s satisfaction and thus the shopping experience. We further introduced the Virtual Reality Shopping Experience (VRSE) model based on customer satisfaction, task performance and user preference. A case study of a first VR shop prototype was conducted and evaluated with respect to the VRSE model. The results showed that the usability and user experience of our system are above average overall. In summary, searching for a product in a WebVR online shop using speech input in combination with VR output proved to be the best regarding user performance (speed, error rate) and preference (usability, user experience, immersion, motion sickness).

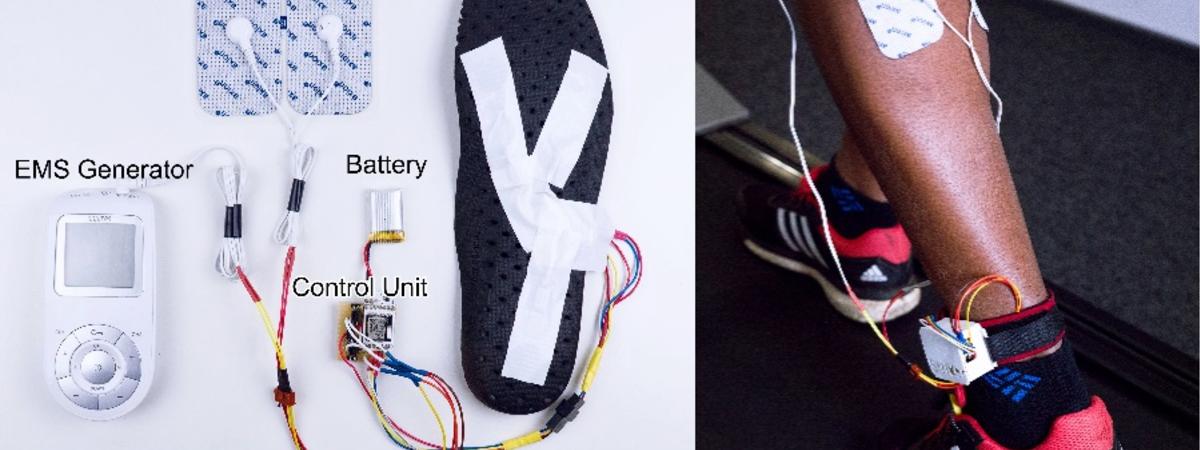

FootStriker: An EMS-based Foot Strike Assistant for Running

In running, knee-related injuries are very common. The main cause is the high impact forces that occur when striking the ground with the heel first. Mid- or forefoot running is generally known to reduce impact loads and to be a more efficient running style. In this paper, we introduce a wearable running assistant, consisting of an electrical muscle stimulation (EMS) device and an insole with force sensing resistors.

Shifty: A Weight-Shifting Dynamic Passive Haptic Proxy

We define the concept of Dynamic Passive Haptic Feedback (DPHF) for virtual reality by introducing the weight-shifting physical DPHF proxy object Shifty. This concept combines actuators known from active haptics and physical proxies known from passive haptics to construct proxies that automatically adapt their passive haptic feedback. We investigate how Shifty can, by automatically changing its internal weight distribution, enhance the user’s perception of virtual objects interacted with.

INGAME

We investigate “bottom-up” gamification, i.e., giving users the option to gamify an experience on their own, by examining the usefulness of a task management app that implements a “bottom-up” gamification concept.

WaterCoaster

The WaterCoaster measures how much the user has drunk and, if necessary, reminds them to drink more. The app is designed as a game in which the user needs to take care of a virtual character.

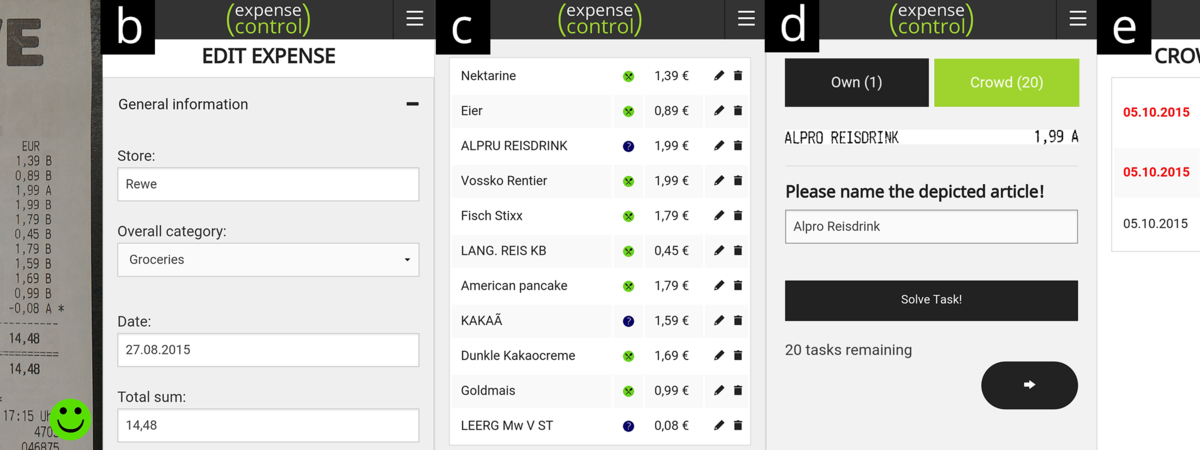

Expense Control

We investigate a crowd-based approach to enhance the outcome of optical character recognition in the domain of receipt capturing to keep track of expenses. In contrast to existing work, our approach is capable of extracting single products and provides categorizations for both articles and expenses, through the use of microtasks which are delegated to an unpaid crowd.
